About me

I’m currently taking a few months to explore entrepreneurship. Maybe I’ll come up with something useful; I’ll certainly learn along the way. I’ll try to document what I learn each month during this journey.

Before that, I was a PostDoc at ETH Zürich, working on automated design with Mark Fuge. My research areas: Multi-Objective Optimization, Reinforcement Learning, and Benchmarking.

… Before that, I was a PhD student in the PCOG group at the University of Luxembourg, under the supervision of Grégoire Danoy, where I wrote a thesis on Multi-Objective RL and won several awards from the FNR and from the University of Luxembourg.

… and even before that, I was a tech lead at N-SIDE, a company specializing in optimization software.

This is my PhD thesis in a nutshell.

Aside from the cool things mentioned above, I am into cinema, cycling, and beer (yes, I am Belgian).

Most significant publications

- EngiBench: A Framework for Data-Driven Engineering Design Research: NeurIPS (2025).

- Multi-Objective Reinforcement Learning Based on Decomposition: A Taxonomy and Framework: JAIR (2024).

- Toolkit for MORL: NeurIPS (2023).

Open source

I have been quite involved in open-source work over the past few years, and I’m quite proud to say the amazing tools we’ve built have been downloaded several hundred thousand times. I’m a member of the Farama Foundation, a non-profit organization that aims to facilitate the development of open-source tools for Reinforcement Learning.

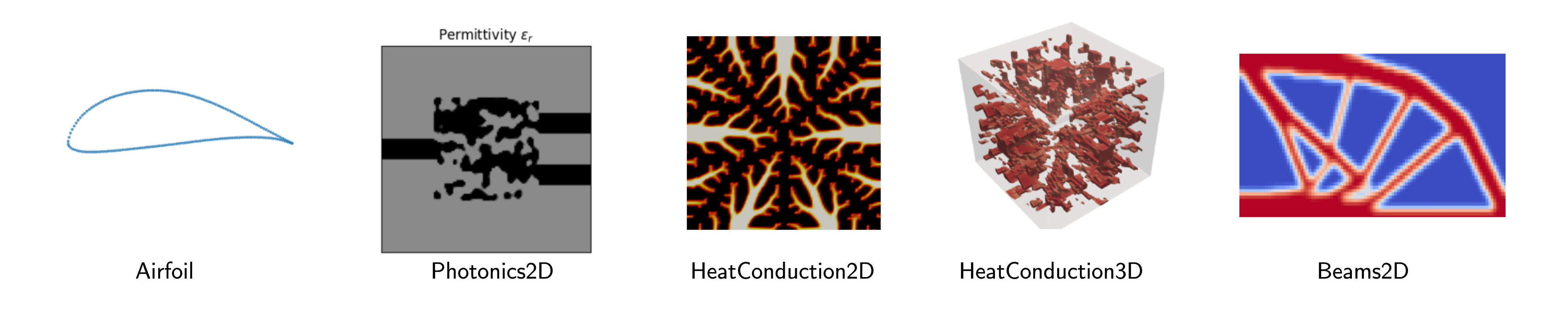

EngiBench

EngiBench offers a collection of engineering design problems, datasets, and benchmarks to facilitate the development and evaluation of optimization and ML algorithms for engineering design. Our goal is to provide a standard API that lets researchers easily compare and evaluate their algorithms on a wide range of engineering design problems: think aircraft wings, beams, and heat conduction components. We also provide generative algorithms compatible with the API in EngiOpt.

A toolkit for reliable research in MORL

We wrote a few repositories to help researchers reproduce the results of existing MORL algorithms and to ease the whole research process by providing clean implementations and examples. By making this public, we hope to attract even more people to the MORL field and remove boilerplate from the research process. A paper describing the whole toolkit was published at NeurIPS 2023.

MO-Gymnasium

MORL-Baselines

MORL-Baselines is a repository containing multiple MORL algorithms built on MO-Gymnasium. We aim to provide clean, reliable, and validated implementations, as well as tools that help in developing such algorithms. Features include automated experiment tracking for reproducibility, hyper-parameter optimization, multi-objective metrics, and more.
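To give a flavour of what multi-objective metrics work with, here is a minimal sketch of filtering a set of return vectors down to its Pareto front. This is not code from MORL-Baselines: the function names `dominates` and `pareto_front` and the sample data are made up for illustration, and the sketch assumes every objective is to be maximized.

```python
from typing import List, Sequence, Tuple


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """Return True if `a` Pareto-dominates `b` (all objectives maximized).

    `a` dominates `b` when it is at least as good on every objective
    and strictly better on at least one.
    """
    at_least_as_good = all(x >= y for x, y in zip(a, b))
    strictly_better = any(x > y for x, y in zip(a, b))
    return at_least_as_good and strictly_better


def pareto_front(points: List[Tuple[float, ...]]) -> List[Tuple[float, ...]]:
    """Keep only the points that no other point dominates (input order preserved)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical two-objective returns collected from four policies.
returns = [(1.0, 5.0), (2.0, 4.0), (1.5, 3.0), (3.0, 1.0)]
front = pareto_front(returns)
print(front)
```

Here `(1.5, 3.0)` is dropped because `(2.0, 4.0)` is better on both objectives; the other three points trade one objective against the other, so none of them dominates another.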

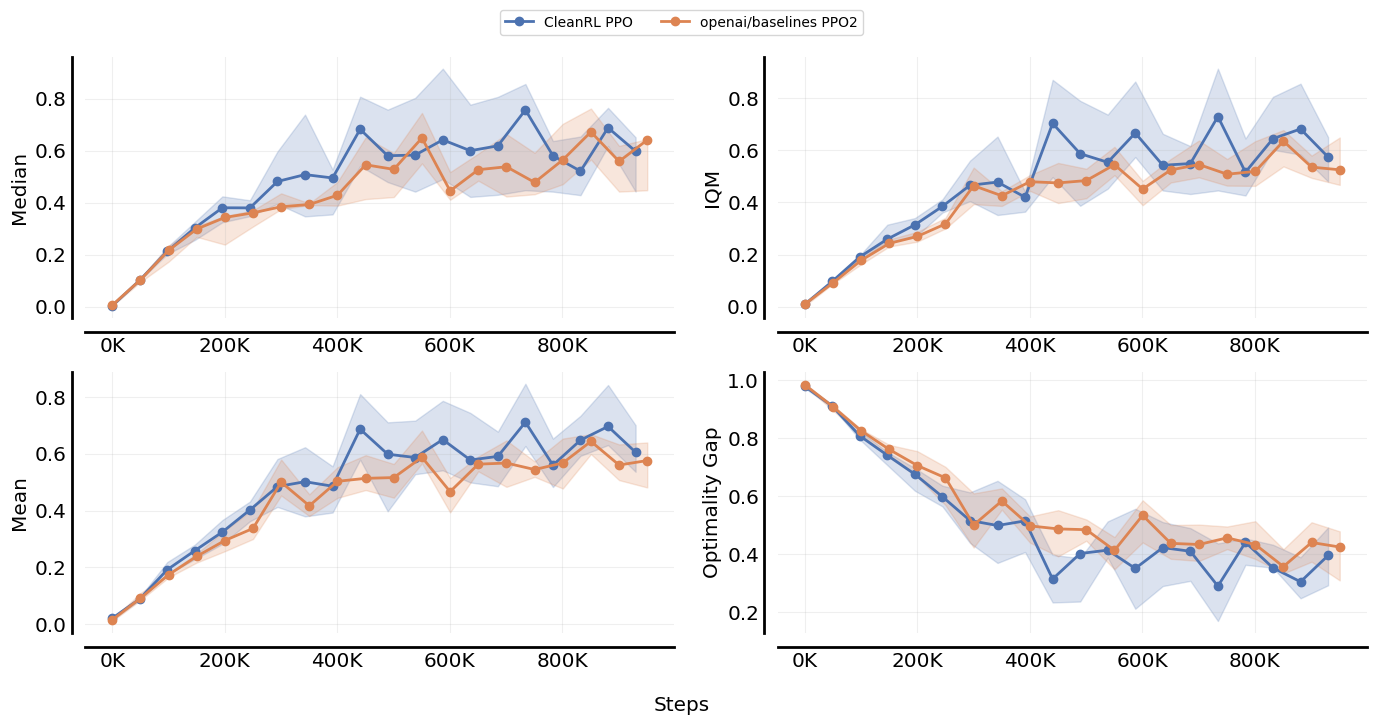

Open RL Benchmark

OpenRLBenchmark is a comprehensive collection of tracked experiments for RL. It aims to make it easier for RL practitioners to pull and compare all kinds of metrics from reputable RL libraries like Stable-Baselines3, Tianshou, CleanRL, and, of course, MORL-Baselines 😎.

MOMAland

MOMAland is a standard MOMARL API and suite of environments. It is basically a multi-agent version of MO-Gymnasium, or a multi-objective version of PettingZoo 🙂. It is also integrated into the Farama toolkit.

Paper: MOMAland: A Set of Benchmarks for Multi-Objective Multi-Agent Reinforcement Learning

CrazyRL

CrazyRL is a MOMARL library built on a multi-objective extension of the PettingZoo API. It makes it possible to learn swarm behaviours in a variety of environments, such as the one shown on the left. We also implemented the full MOMARL loop on GPU using JAX, which lets us train agents 2000x faster than when the environment runs on the CPU. Check the video on YouTube.
